Benefits and Limits of AI in Test Automation: A Balanced View

Everyone is talking about AI in test automation right now. Some treat it like a silver bullet that will wipe out manual testing overnight. Others roll their eyes and call it overhyped. The truth is somewhere in the middle. AI can make testing faster, smarter, and less painful—but it also has blind spots. To get value out of it, you need to see both sides clearly.

AI in Test Automation: What It Is and Isn’t

Let’s get one thing straight: AI doesn’t “know” your software. It doesn’t care about your business goals or your customers’ patience. What it does is pattern work.

AI QA tools take inputs (user flows, plain-text instructions, error logs) and turn them into something you can test. They’re good at repetition. They can generate tests, fix broken selectors, and highlight risks faster than any human could. That’s the power of AI in software test automation.

But it’s not wisdom. AI QA tools won’t tell you if your checkout flow feels clunky. They won’t warn you when a subtle UI bug will drive users mad. That judgment is still on you. The value of AI in test automation lies in the balance: machines speed things up, humans make sense of the results. Together, you cover more ground without drowning in manual work.

Tangible Benefits of AI in Test Automation

AI is already paying off. Some industry surveys report that nearly half of QA teams have cut more than 50% of their manual testing thanks to smarter automation. Efficiency is the headline benefit, but it’s not the only one. So what are the actual benefits?

✔ Faster test creation

Writing tests used to eat half your day. Now you drop instructions in plain English and watch them become runnable tests.

✔ Deeper coverage

AI doesn’t get stuck on the happy path. It throws in edge cases you probably wouldn’t think of.

✔ Less fragile tests

A tiny app change no longer wrecks your whole suite. AI tools adapt so you’re not constantly fixing the same scripts.
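To make the "adapt" idea concrete, here is a minimal sketch of one way a self-healing locator can work, assuming a deliberately simplified model: page elements are plain Python objects rather than a real DOM, the primary locator is an element id, and the tool stored a "fingerprint" of the element (tag, attributes, text) when the test was recorded. If the id no longer matches, the sketch falls back to the element whose features overlap most with that fingerprint (Jaccard similarity). The names `Element`, `locate`, `similarity`, and the 0.5 threshold are all hypothetical; commercial AI tools may use learned models instead of this heuristic.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Element:
    # Hypothetical stand-in for a DOM node: tag name, attributes, visible text.
    tag: str
    attrs: dict[str, str] = field(default_factory=dict)
    text: str = ""


def features(el: Element) -> set[tuple[str, str]]:
    # Flatten an element into comparable (kind, value) pairs.
    feats = {("tag", el.tag)}
    feats |= {(f"attr:{k}", v) for k, v in el.attrs.items()}
    if el.text:
        feats.add(("text", el.text))
    return feats


def similarity(a: Element, b: Element) -> float:
    # Jaccard similarity: shared features divided by all distinct features.
    fa, fb = features(a), features(b)
    return len(fa & fb) / len(fa | fb)


def locate(page: list[Element], element_id: str, fingerprint: Element,
           threshold: float = 0.5) -> Element | None:
    # 1. Try the primary locator first: an exact id match.
    for el in page:
        if el.attrs.get("id") == element_id:
            return el
    # 2. "Heal": pick the element most similar to the recorded fingerprint,
    #    but only if it is similar enough to be a plausible match.
    scored = [(similarity(fingerprint, el), el) for el in page]
    best = max(scored, key=lambda pair: pair[0], default=None)
    if best is not None and best[0] >= threshold:
        return best[1]
    return None
```

For example, if a redesign renames the checkout button's id from `checkout-btn` to `btn-checkout-v2` but keeps its tag, class, type, and label, the id lookup fails and the fallback still finds the button (similarity 4/6). The threshold is the design trade-off: set it too low and the test may silently click the wrong element, which is exactly where a human reviewer still matters.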

✔ Quicker debugging

No more cryptic error messages. Failures come with explanations that actually make sense.

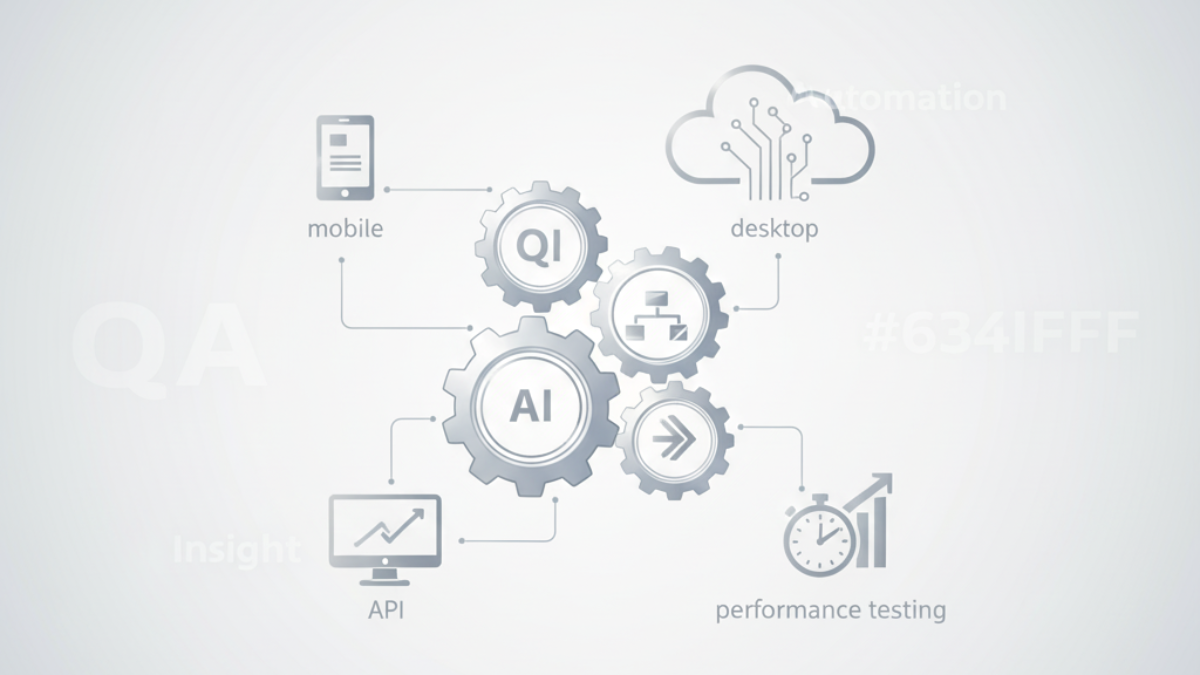

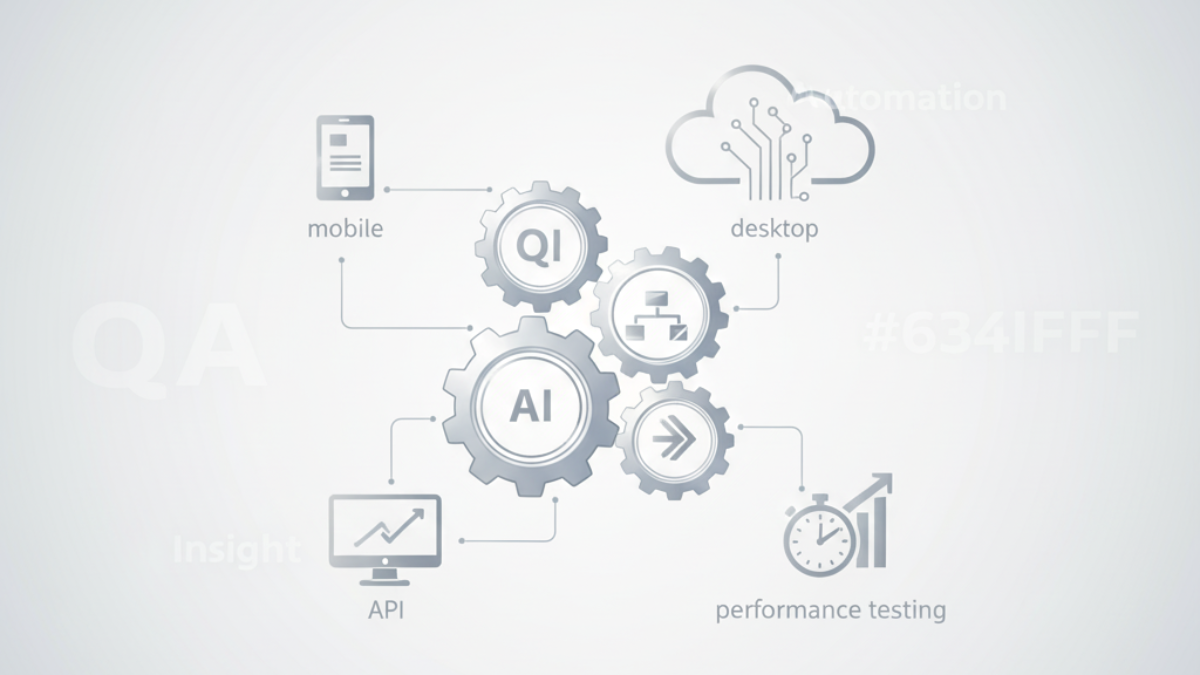

✔ Scaling across platforms

Web, mobile, and desktop automation are handled in the same place. No tool juggling.

✔ Clearer insights

Test reporting points out performance issues and patterns without drowning you in raw data.
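One simple way to surface patterns in a pile of failures is to group them by a normalized "signature", so that hundreds of near-identical messages collapse into a few buckets. The sketch below is a plain heuristic, not a description of how any particular AI QA tool works: it assumes failures arrive as raw message strings, masks volatile tokens (hex addresses and numbers) with placeholders, and counts the resulting signatures. The function names `signature` and `summarize` are hypothetical.

```python
import re
from collections import Counter

# Volatile tokens that differ between otherwise-identical failures.
# Hex is masked before plain numbers so "0x1f" becomes "<hex>", not "<num>x<num>f".
_VOLATILE = [
    (re.compile(r"0x[0-9a-fA-F]+"), "<hex>"),
    (re.compile(r"\d+(\.\d+)?"), "<num>"),
]


def signature(message: str) -> str:
    # Reduce a raw failure message to a stable, comparable signature.
    sig = message.strip()
    for pattern, placeholder in _VOLATILE:
        sig = pattern.sub(placeholder, sig)
    return sig


def summarize(failures: list, top: int = 3) -> list:
    # Count failures per signature and return the most frequent groups.
    return Counter(signature(m) for m in failures).most_common(top)


failures = [
    "TimeoutError: #checkout not visible after 5000 ms",
    "TimeoutError: #checkout not visible after 7500 ms",
    "AssertionError: expected 200 but got 500",
    "TimeoutError: #checkout not visible after 5000 ms",
    "AssertionError: expected 200 but got 404",
]
for sig, count in summarize(failures):
    print(count, sig)
```

Five raw messages become two groups: three checkout timeouts and two unexpected status codes. The catch is that masking every number can also merge failures that really are different (a 500 and a 404 land in the same bucket here), so the grouping is a starting point for a human to investigate, not a verdict.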

✔ Seamless workflows

Hook AI into CI/CD, project management, or flow control tools and stop bouncing between systems.

✔ Handling complexity

API testing, performance testing, IoT. The messy stuff you used to dread gets pulled into automation, too.

Key Limitations of AI Quality Assurance (QA) Tools

AI in test automation has some limits. If you expect it to think for you, you’ll end up with gaps you didn’t see coming. AI doesn’t understand your product’s story or your customer’s frustrations. It sees data, not meaning. Some of the downsides worth noting:

Context blindness: AI can flag broken flows, but it can’t judge whether a flow makes sense to a human.

Heavy setup and training: To get the best out of AI QA tools, you still need people feeding them the right data and guiding them.

False positives and noise: Sometimes AI highlights issues that aren’t real problems, which can waste your time.

Limited creativity: Edge cases outside of learned patterns can slip by unnoticed.

These aren’t deal-breakers, just reminders: AI isn’t a substitute for testers who actually think.

Finding the Sweet Spot: When AI Shines & When It Doesn’t

The trick with AI in testing is knowing when to lean on it and when to trust your own judgment. Use it as muscle, not brain.

Where it shines:

- Building and maintaining repetitive regression tests.
- Regression testing across multiple platforms.
- Processing huge volumes of test reporting data quickly.
- Keeping pace with constant updates in a CI/CD pipeline.

Where it falls short:

- Understanding user intent.
- Evaluating design choices or usability.
- Spotting rare edge cases that only come up in real-world use.

AI handles the grunt work. Testers handle the meaning. That’s the balance.

The Bottom Line

AI in test automation is a gift if you treat it like a partner. It speeds things up, covers more ground, and gives you better data to work with. But it doesn’t remove the need for human testers. The strongest teams are the ones who know how to mix the two.

If you want to see how AI-driven testing fits into modern workflows across web automation, API testing, performance testing, and more—explore AI QA tools like ZeuZ. See where it helps, and where you’ll still want a tester’s eyes on the screen.