How AI Visual Testing Detects UI Bugs with Pixel‑Level Precision

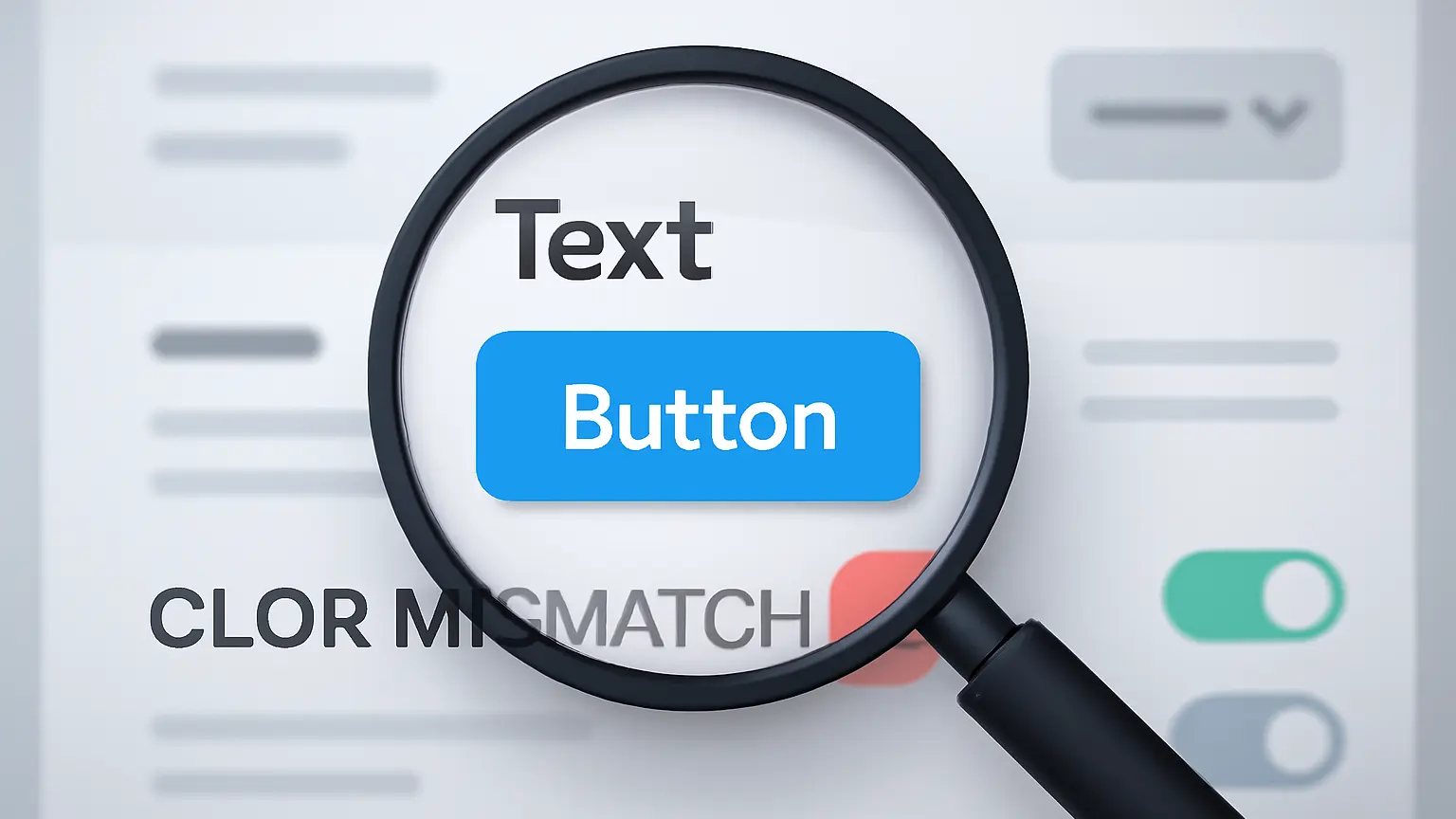

AI visual testing is becoming a non-negotiable part of automated user testing. Why? Because user interfaces don’t break loudly. They break quietly. A button shifts two pixels. A menu overlaps with text. The brand colours are slightly off. These details look small, but they chip away at trust, and AI is here to catch what humans often miss.

Why UI Consistency and Visual Accuracy Matter

Think about the last time you used an app that looked wrong. Maybe the button text was cut off. Or the alignment was slightly crooked. Tiny things. But those tiny things made you doubt whether the rest of the product worked properly. That’s how UI bugs work. They don’t always crash the app. They quietly tell the user: “This wasn’t tested.”

Consistency creates trust. You tap a button on mobile and expect the same experience on desktop. You open a feature in Chrome and assume it looks the same in Firefox. Break that rhythm once, and the illusion of reliability starts to crack.

The problem is that spotting these cracks at scale is brutal for humans. QA teams stare at screens for hours, and fatigue sets in. Developers focus on logic, not aesthetics. Meanwhile, design drift creeps in with every update. AI visual testing is built for exactly this problem. It runs with machine focus, scanning for subtle misalignments across devices and browsers that people would overlook or never notice at all.

Recent surveys show nearly half of companies have already folded AI into their QA processes, and that number is climbing fast. The reason is simple: catching functional bugs is not enough anymore. Quality also means precision at the pixel level.

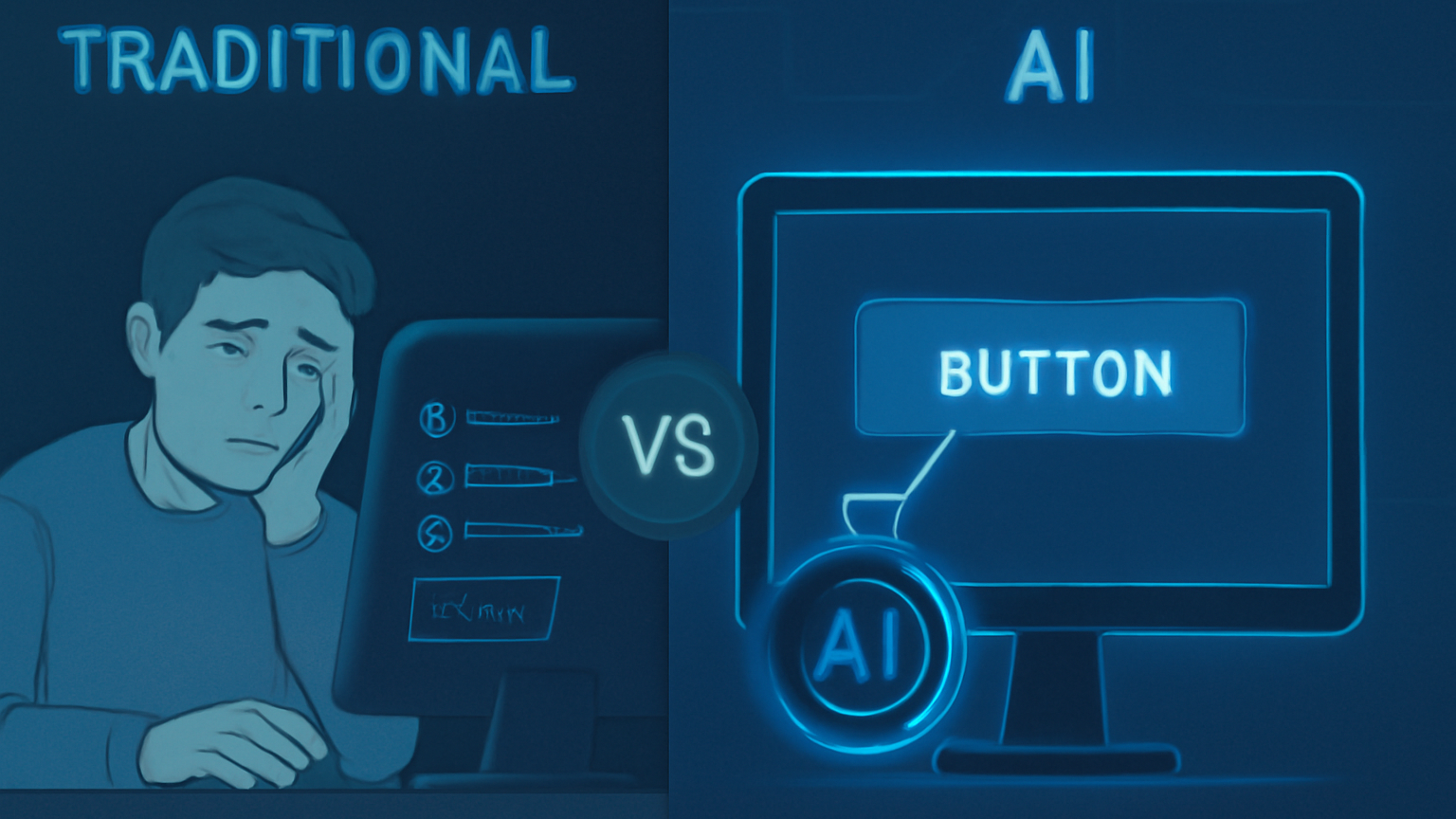

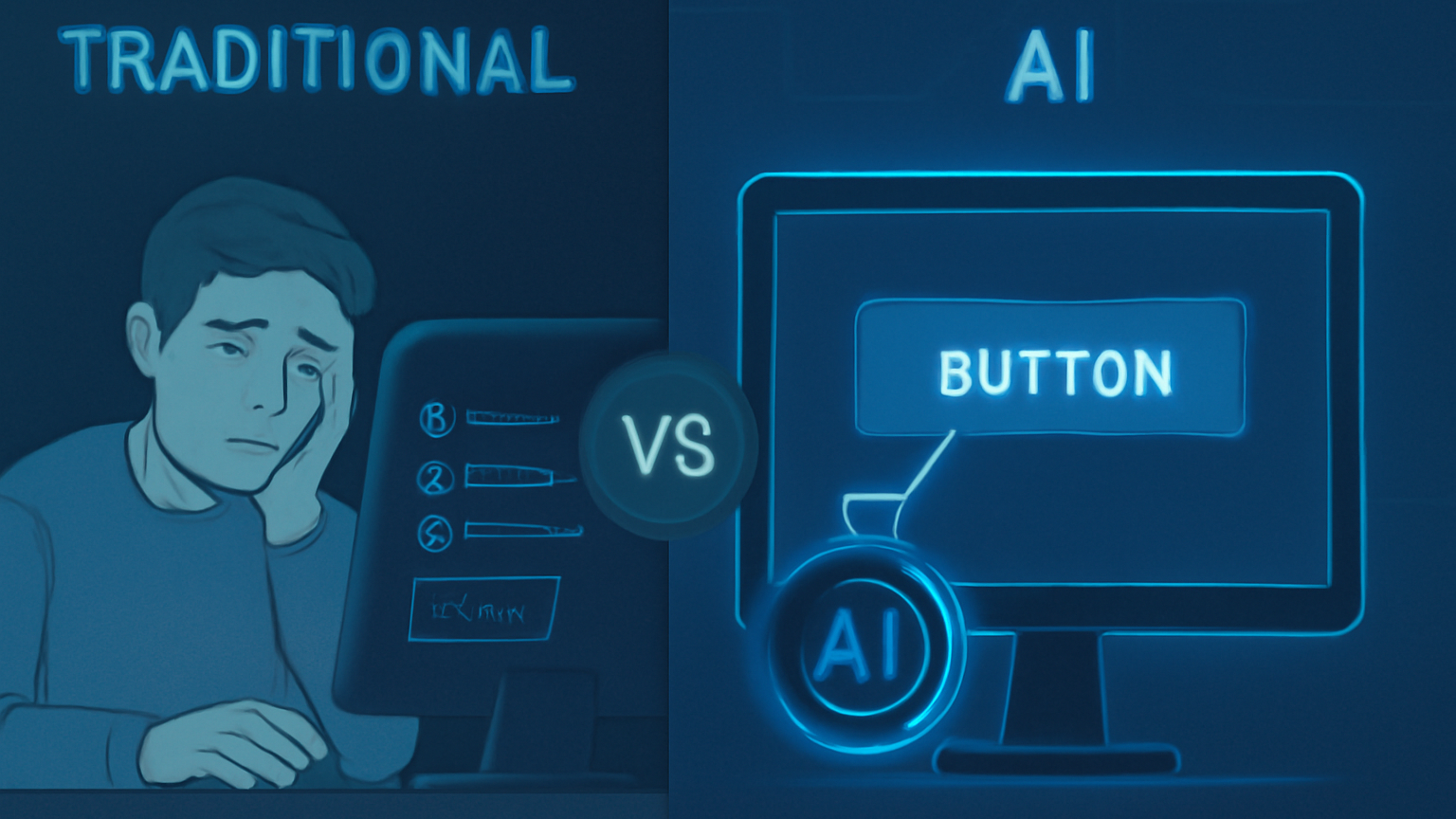

Traditional vs AI Visual Testing: Which One is Better?

Traditional QA has always been built around rules: click this, fill that, submit here. It’s good at confirming whether something works, but it’s blind to how something looks; AI visual testing isn't.

Instead of checking only functionality, it checks the interface visually, almost like a human eye, but without fatigue or bias. It compares current screens to baselines, flags even the slightest shift, and does it across platforms without slowing down release cycles.

| Traditional Testing | AI Visual Testing |
| --- | --- |
| Focuses on functionality (clicks, flows) | Focuses on both functionality and visual accuracy |
| Relies on coded assertions | Relies on image comparison and pattern recognition |
| Misses subtle UI shifts | Detects pixel-level inconsistencies automatically |
| Needs manual validation for visuals | Automates visual checks across browsers/devices |
| Limited scalability for design accuracy | Scales easily across platforms and releases |

The Science Behind Pixel-Level Precision of AI

AI visual testing works like an obsessive proofreader who never blinks. It doesn’t skim through the page. It zooms into every letter, every pixel, every line. Under the hood, it blends image recognition with machine learning to detect changes no human would register in a routine test cycle.

Instead of relying on hand-written assertions (“the button should be blue”), AI compares snapshots of the interface across versions. It learns the baseline, then flags anything that strays from it, down to a single-pixel deviation. It doesn’t stop at colour. It tracks alignment, spacing, font rendering, and dynamic elements that often slip through unnoticed.
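To make the snapshot comparison concrete, here is a minimal sketch of an exact pixel diff against a baseline. It is an illustration only, not how any particular tool works: images are modelled as plain nested lists of RGB tuples instead of decoded screenshots, and the names `diff_pixels` and `bounding_box` are hypothetical.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Image = List[List[Pixel]]      # rows of pixels; a stand-in for a decoded screenshot
Point = Tuple[int, int]        # (x, y)


def diff_pixels(baseline: Image, current: Image) -> List[Point]:
    """Return the (x, y) coordinates where the two images differ exactly."""
    if len(baseline) != len(current) or any(
        len(b_row) != len(c_row) for b_row, c_row in zip(baseline, current)
    ):
        raise ValueError("images must have identical dimensions")
    return [
        (x, y)
        for y, (b_row, c_row) in enumerate(zip(baseline, current))
        for x, (b, c) in enumerate(zip(b_row, c_row))
        if b != c
    ]


def bounding_box(points: List[Point]) -> Optional[Tuple[int, int, int, int]]:
    """Smallest inclusive rectangle (left, top, right, bottom) around the points."""
    if not points:
        return None
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))


# A 4x3 white screen with a 2-pixel blue "button" that shifts one pixel right.
WHITE, BLUE = (255, 255, 255), (0, 0, 255)
baseline = [[WHITE] * 4 for _ in range(3)]
baseline[1][0] = baseline[1][1] = BLUE
current = [[WHITE] * 4 for _ in range(3)]
current[1][1] = current[1][2] = BLUE

changed = diff_pixels(baseline, current)
print(changed)                # [(0, 1), (2, 1)]
print(bounding_box(changed))  # (0, 1, 2, 1)
```

A functional test would still pass here, because the button exists and is clickable. Only the image comparison notices that it moved.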

Some of the mechanics:

■ Pattern analysis: The AI identifies visual elements and groups them logically.

■ Baseline comparison: Each release is measured against previous versions for subtle shifts.

■ Context awareness: It learns to ignore acceptable changes (like intentional updates) and highlight unexpected ones.

■ Cross-environment checks: Different browsers or screen sizes often expose flaws; AI normalizes this noise and zeroes in on actual bugs.
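Two of the mechanics above, ignoring acceptable changes and filtering cross-environment noise, can be approximated without any machine learning. The sketch below uses a hand-set per-channel tolerance and hand-drawn ignore rectangles, which is a simpler substitute for the learned behaviour described here. The function name, the parameters, and the toy data are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]       # (R, G, B), each 0-255
Image = List[List[Pixel]]          # rows of pixels
Rect = Tuple[int, int, int, int]   # (left, top, right, bottom), inclusive


def in_any_rect(x: int, y: int, rects: Sequence[Rect]) -> bool:
    """True if (x, y) falls inside at least one rectangle."""
    return any(l <= x <= r and t <= y <= b for l, t, r, b in rects)


def tolerant_diff(
    baseline: Image,
    current: Image,
    ignore: Sequence[Rect] = (),
    tolerance: int = 0,
) -> List[Tuple[int, int]]:
    """Flag pixels whose largest channel difference exceeds `tolerance`,
    skipping anything inside an ignored region.
    Assumes both images have the same dimensions."""
    flagged = []
    for y, (b_row, c_row) in enumerate(zip(baseline, current)):
        for x, (b, c) in enumerate(zip(b_row, c_row)):
            if in_any_rect(x, y, ignore):
                continue  # e.g. a timestamp or an intentionally updated banner
            if max(abs(bc - cc) for bc, cc in zip(b, c)) > tolerance:
                flagged.append((x, y))
    return flagged


GREY = (200, 200, 200)
baseline = [[GREY] * 3 for _ in range(2)]
current = [[GREY] * 3 for _ in range(2)]
current[0][0] = (202, 199, 200)  # rendering noise, e.g. anti-aliasing
current[0][2] = (10, 10, 10)     # dynamic content we expect to change
current[1][1] = (255, 0, 0)      # an unexpected change

print(tolerant_diff(baseline, current))
# [(0, 0), (2, 0), (1, 1)]
print(tolerant_diff(baseline, current, ignore=[(2, 0, 2, 0)], tolerance=3))
# [(1, 1)]
```

With no tolerance and no ignore regions, all three pixels are reported. Once the noise threshold and the ignored rectangle are applied, only the unexpected change remains.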

The result is accuracy that manual testers can’t sustain. Humans get tired. AI doesn’t. And when teams are already running web automation, mobile automation, and desktop automation, precision at this level ties visual quality into the same QA fabric.

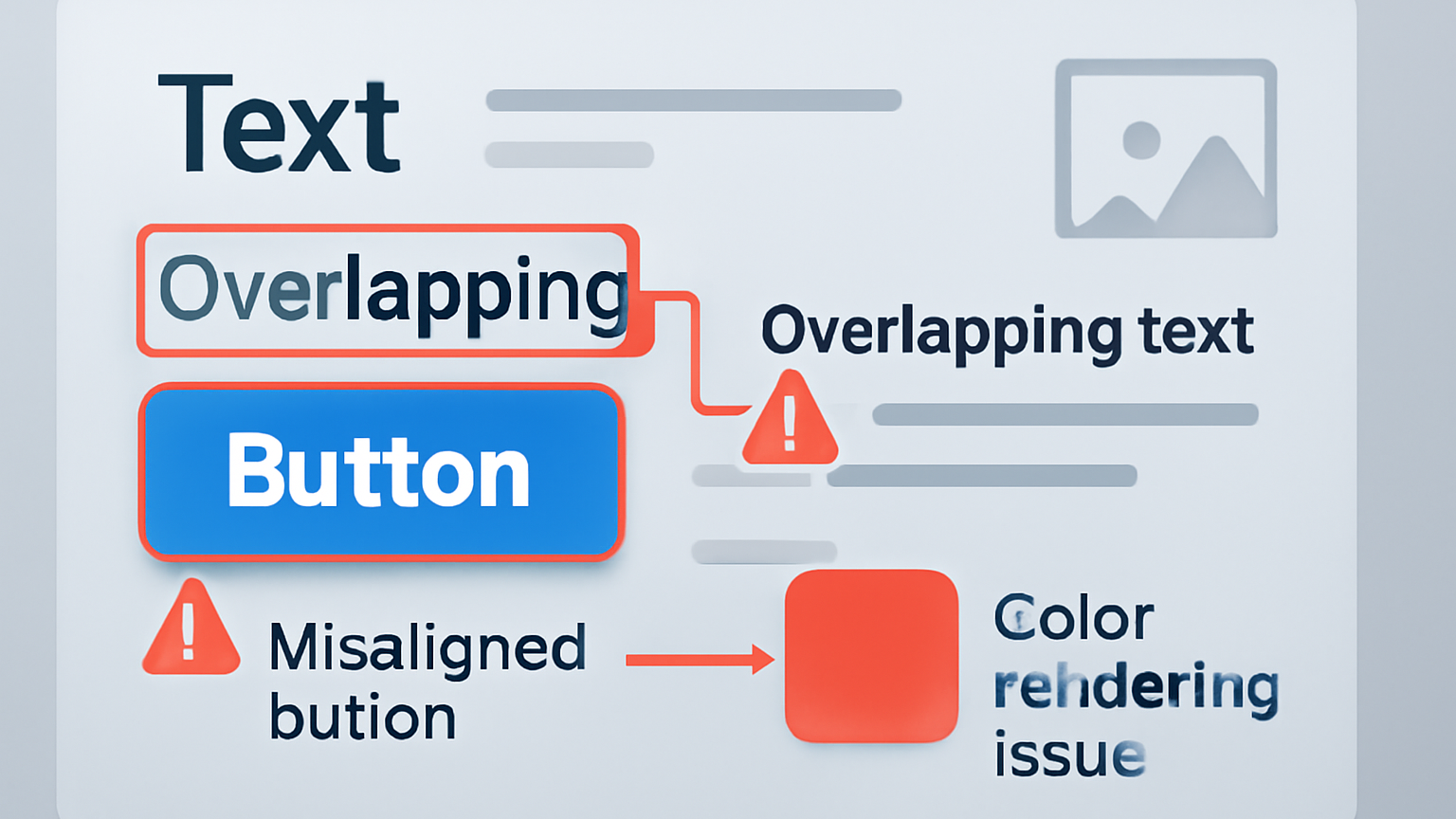

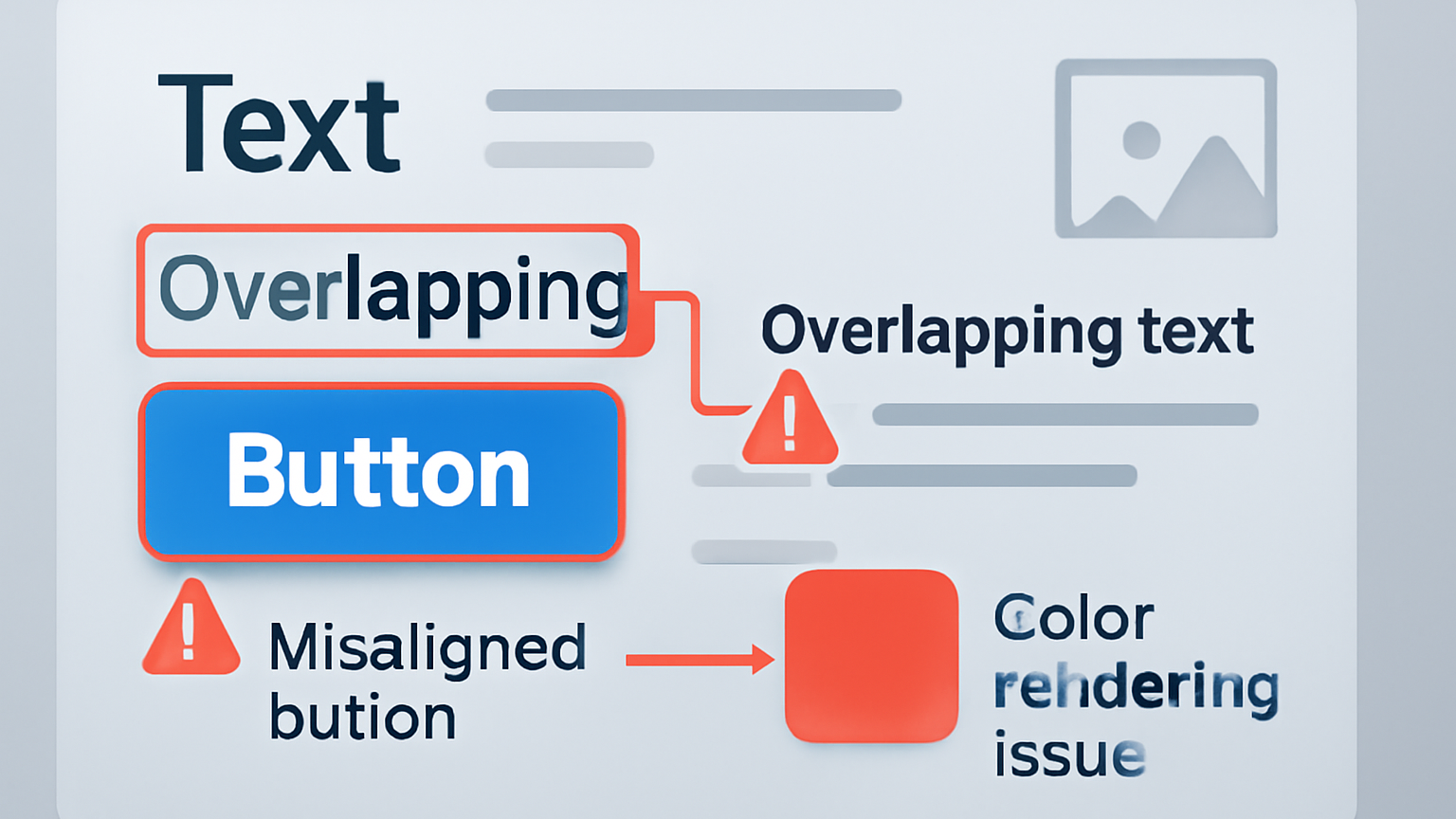

Common UI Bugs Caught Only by AI Visual Testing

You’d be surprised how many issues traditional tests will never catch. Functional checks may pass, but the experience still breaks. Typical UI bugs AI visual testing detects are:

- Buttons shifting slightly after a new CSS rule is applied.
- Text cut off in smaller viewports or different languages.
- Inconsistent font rendering between browsers.
- Images stretched, compressed, or poorly scaled.
- Colour mismatches breaking brand consistency.
- Unexpected whitespace that pushes layouts off balance.
- Icons appearing distorted on high-DPI screens.
- Overlapping menus or dropdowns that hide content.
- Subtle contrast issues that affect accessibility.
- Elements disappearing only in specific environments.

These may sound small. But small bugs pile up. And when users start bumping into them, they leave. AI catches these bugs before users ever see them.
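One item on that list, contrast, can be checked with a published formula. The sketch below computes the WCAG 2.x contrast ratio between a text colour and its background. The formula comes from the WCAG definition of relative luminance; the function names and sample colours are assumptions for illustration, and a real visual testing tool may measure contrast differently.

```python
from typing import Tuple

RGB = Tuple[int, int, int]  # 8-bit sRGB channels

AA_NORMAL_TEXT = 4.5  # WCAG AA minimum contrast for normal-size text


def _linearize(channel: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: RGB) -> float:
    """Relative luminance: 0.0 for black, 1.0 for white."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: RGB, bg: RGB) -> float:
    """WCAG contrast ratio, from 1.0 (identical) to 21.0 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


WHITE, BLACK, LIGHT_GREY = (255, 255, 255), (0, 0, 0), (170, 170, 170)

print(round(contrast_ratio(BLACK, WHITE), 2))                # 21.0
print(contrast_ratio(LIGHT_GREY, WHITE) >= AA_NORMAL_TEXT)   # False
```

Light grey text on a white background looks readable to many people, which is why it slips past manual review. The ratio makes the problem measurable.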

Final Thoughts

Good design is invisible. When everything looks right, users don’t notice; they just flow through the product. But when something looks wrong, even slightly, it pulls attention away from the experience. AI visual testing isn't a luxury anymore. It’s the difference between products that feel professional and those that feel sloppy.

If your QA team already runs automated user testing, expanding into pixel-level checks is the natural next step. Platforms like ZeuZ bundle it with all the features you’d expect from a test automation platform and more. The sooner you adopt it, the sooner your users stop spotting what your QA team missed.